Let us start at the very beginning by asking, "what is a histogram?" In the most basic sense, a histogram is simply a visual representation showing the results of counting a collection of things. It is a visual summary of how many of each thing there are.

As a concrete example, let us count the letters in the word "Mississippi" to see how many of each letter there are. The letter counts are M:1, I:4, P:2, S:4, A:0, B:0, C:0, and so on, with all other letters in the alphabet having a count of zero. That's it! That is the information that a histogram represents. A histogram simply represents this information in the form of a graph, as we will see below.
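If you would like to try this counting yourself, here is a minimal Python sketch. It uses `Counter` from Python's standard `collections` module and treats upper and lower case as the same letter. Any letter that never appears simply reports a count of zero.

```python
from collections import Counter

# Count how many times each letter appears, ignoring upper/lower case.
word = "Mississippi"
letter_counts = Counter(word.upper())

print(letter_counts["M"], letter_counts["I"], letter_counts["P"], letter_counts["S"])  # prints: 1 4 2 4
# Letters that never appear report a count of zero rather than an error.
print(letter_counts["A"])  # prints: 0
```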

Of course, there are several different ways that we could count the letters in the word "Mississippi." We could count the number of vowels (4) versus the number of consonants (7). We could count the number of upper case letters (1) versus the number of lower case letters (10). We could count the number of pen strokes required to write each letter in the word (7 letters require one stroke, 4 letters require two strokes, 0 letters require three strokes, and so on).
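The first two of these alternative counts are easy to reproduce in a few lines of Python. This sketch assumes only a, e, i, o, and u count as vowels (which makes no difference for "Mississippi"). The pen-stroke count depends on handwriting, so it is left out.

```python
word = "Mississippi"
VOWELS = set("aeiouAEIOU")  # assumption: "y" is not treated as a vowel

# Two different ways of sorting the same eleven letters into bins.
vowels = sum(1 for ch in word if ch in VOWELS)
consonants = len(word) - vowels
upper = sum(1 for ch in word if ch.isupper())
lower = sum(1 for ch in word if ch.islower())

print(vowels, consonants)  # prints: 4 7
print(upper, lower)        # prints: 1 10
```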

There are lots of different ways we can count things. The real question is, "which ways of counting provide us with useful information?" Further, once we are done counting, "how can we summarize this information in a clear and useful way?" This is, of course, where histograms come in.

Since this is a photography article, it will focus on the histograms displayed by your camera and the histograms presented by photo editing software, such as Adobe Photoshop. These histograms count the pixels within a given image, typically counting pixels based on their luminosity or color.

To make things a bit easier to begin with, let us start by considering black and white images that only have gray values. That is, images that have only one value (or "channel") per pixel: its brightness, or luminosity.

To understand exactly what the histogram of an image is telling us, we first need to understand what is being counted and how it is being counted. For images, histograms typically count the number of pixels for each possible pixel value. So, to interpret the histogram, we need to understand what pixel values represent.

A two-dimensional (2D) image is just a 2D grid of pixels. Each pixel in the grid contains one or more values. For a black and white image, there is a single value that represents the brightness (or luminosity) of that pixel. For color images, there are multiple values, one for each color channel. Each value then represents the brightness of that specific color in the pixel. For example, many image file formats use three channels: red, green, and blue (or RGB for short). Color will be covered more in section 6, "Adding in Some Color." For now, let us continue focusing on black and white images, since they only have a single value per pixel.
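To make the idea of a grid concrete, here is a small sketch that stores an image as nested Python lists, one list per row. This is only an illustration with made-up pixel values; real image libraries use their own, more efficient array types.

```python
# A tiny 3x4 grayscale image: one brightness value per pixel, stored row by row.
gray_image = [
    [0, 64, 128, 255],
    [32, 96, 160, 224],
    [255, 200, 100, 0],
]

# The same idea for color: each pixel holds three values, (red, green, blue).
rgb_image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

height, width = len(gray_image), len(gray_image[0])
print(height, width)     # prints: 3 4
print(gray_image[1][2])  # row 1, column 2; prints: 160
print(rgb_image[1][0])   # prints: (0, 0, 255)
```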

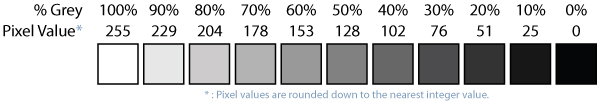

In a black and white (or "grayscale") image, the value of a pixel simply represents how much light there is at that location in the image. A value of 0 (zero) indicates there is no light, and thus the pixel is displayed as pure black. In the most common case, the largest value a pixel can have is 255, and this value is displayed as pure white. "Why use 255," you might ask. That is explained below. All the values between 0 and 255 represent different shades of gray. Figure 1 illustrates how pixel values map to different shades of gray.

Speaking in the most general sense, a histogram is a type of chart that divides a set of things into a set of bins and then displays how many things are in each of those bins. It is typically the case that the bins can be ordered somehow. Since this article focuses on photography, we will avoid a general discussion and focus on how histograms are used in photography. Just know that they are quite useful in a variety of situations where one wants to summarize a given set of data.

As was stated above, the things being counted in photographs are the pixels. More specifically, the number of pixels (things) containing each possible pixel value (bins) is counted. In essence, this is a way of counting how many pixels have a value of 0 (black), how many pixels have a value of 1, and so on.
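Counting pixels this way takes only a few lines of code. The sketch below assumes an 8-bit grayscale image stored as a list of rows (the function name `grayscale_histogram` is hypothetical) and returns 256 counts, one per possible pixel value.

```python
def grayscale_histogram(image):
    """Count how many pixels hold each value from 0 to 255.

    `image` is assumed to be a list of rows of 8-bit integers (0-255).
    """
    counts = [0] * 256  # one bin per possible pixel value
    for row in image:
        for value in row:
            counts[value] += 1
    return counts


tiny_image = [
    [0, 0, 10, 255],
    [10, 10, 128, 255],
]
hist = grayscale_histogram(tiny_image)
print(hist[0], hist[10], hist[128], hist[255])  # prints: 2 3 1 2
print(sum(hist))  # prints: 8 (every pixel is counted exactly once)
```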

The most common way this data is displayed for photographs is to create a chart made up of vertical bars, one vertical bar for each possible pixel value. A taller bar means more pixels have that value. A shorter bar means fewer pixels have that value. These bars are sorted left to right such that 0 (black) is on the left and 255 (white) is on the right. This setup is common in both cameras and photo editing software, such as Adobe Photoshop. See figure 2 for an illustration of this setup.

For illustrative purposes, I have made the vertical bars in figure 2 quite thick so they are easy to see. Because there are 256 possible pixel values (0 to 255), these histograms contain a total of 256 vertical bars. All of those bars need to fit on a camera's display or in an area off to the side on a computer display. As a result, individual bars are normally quite thin, as we will see below in figure 3. After all, the difference between two consecutive pixel values does not mean much to us. At a high level, what we really care about is the distribution of values (dark pixels versus medium pixels versus bright pixels).
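Since what we care about is the overall distribution, one way to get a high-level view is to merge neighboring bins. The following sketch (with a hypothetical `coarse_bins` function) folds the 256 values into three roughly equal-width groups. Splitting into exactly three "dark", "medium", and "bright" groups is an arbitrary choice made for illustration.

```python
def coarse_bins(hist, num_bins=3):
    """Merge a 256-entry histogram into `num_bins` roughly equal-width groups.

    Integer arithmetic maps each pixel value (0-255) to a group index
    from 0 to num_bins - 1.
    """
    merged = [0] * num_bins
    for value, count in enumerate(hist):
        merged[value * num_bins // len(hist)] += count
    return merged


# A made-up image with eight pixels, turned into a 256-bin histogram.
pixels = [5, 20, 40, 90, 120, 130, 200, 250]
hist = [0] * 256
for v in pixels:
    hist[v] += 1

dark, medium, bright = coarse_bins(hist)
print(dark, medium, bright)  # prints: 3 3 2
```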

Figure 3 (below) contains an example of what histograms look like in an older version of Photoshop. They are basically the same in newer versions of Photoshop, but the exact style may vary between versions. Adobe Photoshop is a well-known and very widely used program for working with digital photographs. Today, it is fair to call it the industry standard. If you are unfamiliar with Adobe Photoshop, I suggest that you read up on it and give it a try. Personally, I have used Photoshop for many years. GIMP is a free, open source alternative that can do many of the same things.

After looking at the histogram in figure 3 for just a moment, there are two important pieces of information that we can see: exposure and clipping. Let us break down and discuss each of these ideas.

The histogram of a photograph is directly related to the exposure of the photograph. Hopefully this idea is fairly self-evident at this point. Generally speaking, an underexposed photograph tends to be dark, and thus has more pixels with lower pixel values. This means more pixels are on the left side of the histogram. Conversely, an overexposed photograph tends to be very bright, containing many pixels with high pixel values. This means more pixels are on the right side of the histogram.
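As a rough illustration of this idea, the sketch below computes the average pixel value from a histogram and reports which half of the range it falls in. The `exposure_lean` function is hypothetical and deliberately crude; as discussed below, it is no substitute for judging exposure, and a portrait with an intentionally dark background would fool it.

```python
def exposure_lean(hist):
    """Return the mean pixel value and whether it sits in the dark or bright half.

    A deliberately crude heuristic: it ignores the photographer's intent entirely.
    """
    total = sum(hist)
    mean = sum(value * count for value, count in enumerate(hist)) / total
    lean = "dark" if mean < 127.5 else "bright"  # 127.5 is the middle of 0..255
    return mean, lean


# A made-up histogram: 700 dark pixels (value 30) and 300 mid-tones (value 150).
hist = [0] * 256
hist[30] = 700
hist[150] = 300

mean, lean = exposure_lean(hist)
print(round(mean), lean)  # prints: 66 dark
```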

A photographer capturing the photograph in figure 3 can use the histogram on the back of their camera to tell if the photograph is properly exposed. In this case, the great majority of pixels are in the dark and medium regions, with very few pixels in the bright region. Considering this image was taken in the middle of the day, that suggests the photo may be underexposed (even allowing for the obvious cloud cover). This information can then be used to make exposure adjustments, such as using a slower shutter speed (a longer exposure time), and then trying again with the adjusted settings.

Of course, this is an oversimplification, and practice is certainly required. Understanding the exposure of a photograph is more complicated than simply seeing where most of the image's pixels are positioned in the histogram. And as always, a photographer's own personal aesthetics will play a role as well. Think of the histogram as a useful tool that a photographer can use to determine if they have achieved the exposure they desire.

For example, a high-contrast portrait with a very dark background will obviously have many dark pixels making up that dark background. Since the photographer desires a dark background, they will probably expect the number of dark pixels in the resulting histogram to be higher than normal. This is a case where having many dark pixels does not necessarily mean the photo is underexposed. This will be explored more in section 2, "Understanding what a histogram is NOT telling you."

You may be asking yourself, "why not just look at the preview of the photo itself to see how well exposed the photo is?" Using the histogram is particularly useful when reviewing the exposure of a photo using a camera's LCD screen. The LCD displays on the back of cameras emit light. This can make the photos displayed on them look brighter than they actually are. In some cases, the brightness of the LCD can be adjusted, which will obviously affect how bright the photo appears to be.

Similarly, the same photo will look different depending on how bright the surrounding light is relative to the LCD screen. The LCD will look particularly bright if the camera is in a dark room. A wedding photographer may find themselves in a dimly lit reception hall asking, "is this photo actually bright enough, or does it just look that way because my LCD is the brightest light in this room?" Conversely, an LCD will probably look relatively dim on a bright sunny day. A landscape photographer may ask themselves, "is this photograph too dark, or does it just look dark because there is a lot of glare from the sun on my LCD screen?"

These problems can be avoided by using the histogram. The histogram is not affected by the brightness of the camera's LCD screen or the level of ambient light in the environment. The histogram is a true measurement of the resulting photograph. This is not to say the preview can or should be ignored. That is certainly not the case for many reasons. However, the histogram can be a very useful tool for evaluating the quality of a photograph on location as it is being taken. The same is true when editing the photograph later, during post-processing.

When a digital camera is used to take a photograph, the sensor converts the light that reaches it into an electrical signal. This is what people mean when they say the sensor measures the amount of light entering the camera. As more light reaches a given pixel on the camera's sensor, the electrical signal becomes stronger, and thus the pixel value becomes greater. The opposite is also true: a lower level of light produces a weaker electrical signal and thus a lower pixel value. The same is true for film, except that a chemical reaction occurs rather than the generation of an electrical signal.

The range of light that a given sensor can detect is limited and fixed: there is only a certain range of light intensities that a camera's sensor can effectively measure. This is why a camera's exposure settings are used to change the amount of light reaching the sensor. In other words, the photographer adjusts the exposure settings in order to control the intensity of the light reaching the sensor. The goal is to boost or limit that light so that it falls within the range of intensities (values) that the sensor can effectively detect.

When too much light reaches the sensor, the electrical signal reaches a limit, and thus the pixel value is maxed out, or clipped to the brightest possible value. When too little light reaches the sensor, the electrical signal is not strong enough to register a value for the pixel, so the value is clipped at the minimum pixel value of 0.
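A toy model can make this behavior concrete. In the sketch below, the pixel value grows in proportion to the light until it hits 255, and any signal below a minimum threshold registers as 0. The linear response, the threshold of 5, and the `gain` parameter (standing in for the exposure settings) are all simplifying assumptions, not a description of how any particular sensor works.

```python
def sensor_response(light, gain=1.0, threshold=5.0, max_value=255):
    """Toy model of one sensor pixel (all parameters are illustrative assumptions).

    `light` is an amount of light in arbitrary units; `gain` stands in for the
    exposure settings that scale how much of that light reaches the sensor.
    """
    signal = gain * light
    # Too weak to register: the value is clipped to 0.
    if signal < threshold:
        return 0
    # Otherwise the value grows with the signal until it is clipped at max_value.
    return min(max_value, round(signal))


for light in [2, 50, 200, 400, 1000]:
    print(light, sensor_response(light))

# Cutting the exposure in half brings a clipped reading back into range.
print(sensor_response(400, gain=0.5))  # prints: 200
```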

The idea of clipping to zero may seem a bit odd at first. After all, zero means no light at all. How can there be less than no light at all? In this case, it is more about reaching a minimum threshold before the sensor can register a value greater than zero. The situation is analogous to how eyes work. Imagine a human and a cat are both sitting in a very dark room. A human's retina (their sensor) requires some minimum amount of light to see. If there is some light, but not enough light, the room just looks completely black to the human (i.e. values are clipped to zero). Meanwhile, the cat's retina is more sensitive. What looks completely black to the human is visible to the cat. In both cases, an eye's iris is analogous to the aperture setting on a camera. The wider it opens, the more light reaches the retina.

This clipping effect can be seen in the histogram presented in figure 3. Notice that the rightmost column (the brightest pixel value) is noticeably taller than the columns next to it. This jump in the number of pixels at the extreme right side of the histogram is an indication that several pixels were clipped, or maxed out, at 255. Decreasing the exposure would bring the pixels that were brighter than the sensor could handle back within its range. The same is also true on the dark end of the histogram. The leftmost column (the darkest pixel value) is also noticeably taller than the columns next to it. This is an indication that some pixels are actually darker than the sensor could detect, and thus were clipped to a value of 0.

Whenever clipping occurs, detail is being lost. If an area of the photograph is clipped to one side of the range or the other, it means all those pixels are being forced to the same value. The pixels in that area all have the same pixel value and thus all look the same. The area looks flat (i.e. an area of pure white or pure black). If this happens with just a few pixels here and there in a photograph, it is typically not a big deal and can safely be ignored. However, if large areas of an image are being clipped, the exposure will need to be adjusted accordingly.
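One simple way to check for this, sketched below, is to measure what fraction of all pixels sit in the two extreme bins. This is a simplification of the visual check described above (comparing the end columns with their neighbors), and both the hypothetical `clipping_report` function and its 1% warning threshold are assumptions for illustration.

```python
def clipping_report(hist, warn_fraction=0.01):
    """Return the fractions of pixels clipped to 0 and to 255, plus a warning flag.

    `warn_fraction` (1% by default) is an arbitrary threshold for "large areas".
    """
    total = sum(hist)
    shadows = hist[0] / total
    highlights = hist[-1] / total
    needs_attention = shadows > warn_fraction or highlights > warn_fraction
    return shadows, highlights, needs_attention


hist = [0] * 256
hist[0] = 50       # a few pure black pixels
hist[128] = 9_900  # mid-tones
hist[255] = 50     # a few pure white pixels
print(clipping_report(hist))  # prints: (0.005, 0.005, False)

hist[255] = 2_000  # now a large blown-out area
print(clipping_report(hist)[2])  # prints: True
```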

The limited range of sensors and the resulting clipping have led photographers to use techniques such as high dynamic range (HDR) photography. HDR is used to combine multiple photographs with different exposure levels into a single composite photo such that details in both light and dark areas of the scene are visible. A discussion of HDR goes beyond the scope of this article. However, clipping can be an indication that using HDR techniques might be warranted in a given lighting situation. In the case of figure 3, clipping is occurring on both sides of the histogram, suggesting that a single exposure cannot cover the full range of light in the scene. Multiple exposures would likely be required.

Noise occurs whenever the measured value of a pixel is not true to the level of light reaching that pixel. For example, the true value for a given pixel might be 100, but noise may cause the value actually measured to be 97 or 104. Factors that can increase noise in a camera sensor include, but are not limited to, electrical noise inherent to the sensor, increasing the ISO setting, and extending the exposure time.

The actual thing a sensor is trying to measure is referred to as the signal. In the case of camera sensors, the signal is the amount of light reaching the sensor. Noise is essentially the amount of error in the measurement of that signal. Good sensors have a high signal-to-noise ratio (SNR). That is, the amount of noise introduced by the sensor is relatively small when compared to the signal. The stronger the signal is relative to the noise, the less noise is visible within the resulting photograph.

In cases where the level of noise is fixed, regardless of a signal's strength, that noise has a relatively stronger effect on weaker signals. In photography, this means the darker pixels are affected more. Have you ever noticed that darker areas within photographs seem to have more noise in them relative to the brighter areas?
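A little arithmetic shows why. The sketch assumes a fixed noise level with a standard deviation of 4 pixel values (a made-up number) and computes the SNR, here simply the signal divided by that noise level, for a dark, a medium, and a bright pixel.

```python
# A made-up, fixed noise level: a standard deviation of 4 pixel values,
# the same for every pixel regardless of how bright it is.
NOISE = 4.0


def snr(signal, noise=NOISE):
    """Signal-to-noise ratio: how many times larger the signal is than the noise."""
    return signal / noise


for signal in [10, 50, 200]:
    print(f"signal {signal:3d}: SNR {snr(signal):5.1f}, "
          f"noise is {NOISE / signal:.0%} of the signal")
```

With these numbers, the noise amounts to 40% of the signal for a pixel with a value of 10, but only 2% for a pixel with a value of 200.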

In terms of histograms, noise acts almost as if a "blurring" effect were applied to the histogram. The noise shifts where a given pixel ends up in the histogram. Let us take an example from astrophotography to illustrate.

If you have ever attempted astrophotography, you may have heard of "dark frames." Astrophotography requires capturing very faint light in the night sky, so long exposure times and higher ISO settings tend to be used. As such, noise is a tremendous problem, and techniques are used to reduce the amount of noise relative to the signal (i.e. to increase the signal-to-noise ratio). Dark frames are photos taken with the lens cap still on the lens. Their purpose is to measure the baseline noise within the sensor when no light is actually reaching it. If you have never given this a try, you might expect the resulting photo to be completely black. That is, all pixel values should be zero since no light reaches them. However, this is not the case.

Rather than having a histogram with all pixels in the left-most column (0), the pixels are actually spread out across several of the left-most columns. The number of columns they are spread out over is determined by the amount of noise. The more noise, the more spread out they are. This is an example of the "blurring" effect noise has on the histogram. Give this a try to see what it looks like for your camera.
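You can also get a feel for this effect without a camera. The sketch below simulates a dark frame by giving every pixel a true value of 0, adding random Gaussian noise, and clipping negative readings to 0. The hypothetical `simulate_dark_frame` function, the Gaussian noise model, the image size, and the noise levels are all assumptions made for illustration; a real sensor's noise has a more complicated structure.

```python
import random


def simulate_dark_frame(width=100, height=100, noise_std=2.0, seed=1):
    """Simulate the histogram of a dark frame (lens cap on, so the true value is 0).

    Each pixel gets random Gaussian noise with standard deviation `noise_std`
    (an illustrative assumption); readings are rounded and clipped to 0..255.
    """
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    hist = [0] * 256
    for _ in range(width * height):
        reading = round(rng.gauss(0.0, noise_std))
        hist[min(255, max(0, reading))] += 1
    return hist


for noise_std in (1.0, 4.0):
    hist = simulate_dark_frame(noise_std=noise_std)
    brightest = max(value for value, count in enumerate(hist) if count > 0)
    print(f"noise {noise_std}: {hist[0]} pixels at 0, spread up to value {brightest}")
```

With the larger noise level, the pixels spread over noticeably more of the left-most columns, and in both cases a large share of them still lands exactly on 0 because negative readings are clipped.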

Noise can affect all the pixels in a photograph, not just those that would otherwise be zero. The dark frame example, where one expects every pixel value to be zero, simply demonstrates the point clearly. All the pixels in an image are affected by noise. Take a photo with the ISO at the maximum setting for your camera to see this in action.

At this point, you may be wondering why pixel values range from 0 to 255. From the point of view of a photographer, this range probably feels a bit arbitrary. However, if you are familiar with how computers represent data, you may already know the answer: 256 (the values 0 through 255) is the number of unique values that can be represented using a single byte of memory. Computers use binary, or base 2, numbers to encode integer values.

Computers use "little switches" called transistors that can either be on or off. Data contained within computers is encoded as a series of ones (on) and zeros (off) so that the computer can process, store, and otherwise manipulate the data. Each one of these 1s and 0s is referred to as a bit. A collection of eight bits is called a byte.

Many common image formats encode a single pixel value using a byte of memory. A collection of eight bits can be used to encode 256 unique values (0 to 255) using binary, or base 2, numbers. Some image encodings support more bits per pixel value, using either 16 bits (2 bytes) or 32 bits (4 bytes). This allows those formats to encode more unique values for each pixel value.
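The arithmetic is easy to check in Python: n bits can encode 2 to the power n unique values, so one byte gives 256 values and two bytes give 65,536. This sketch only covers integer encodings, and the `levels` helper is a hypothetical name.

```python
def levels(bits):
    """Number of unique integer values that `bits` binary digits can encode."""
    return 2 ** bits


for bits in (8, 16):
    print(f"{bits} bits: {levels(bits)} values, from 0 to {levels(bits) - 1}")

# 255 written in binary is eight 1s: every bit in the byte is "on".
print(format(255, "08b"))  # prints: 11111111
print(format(0, "08b"))    # prints: 00000000
```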

If you are interested in learning more, start with the Wikipedia article on "bytes."